12. Data integration

12.1. Motivation

A central challenge in most scRNA-seq data analyses is presented by batch effects. Batch effects are changes in measured expression levels that result from handling cells in distinct groups or “batches”. For example, a batch effect can arise if two labs have taken samples from the same cohort, but these samples are dissociated differently. If Lab A optimizes its dissociation protocol to dissociate cells in the sample while minimizing the stress on them, and Lab B does not, then it is likely that the cells in the data from Lab B will express more stress-linked genes (JUN, JUNB, FOS, etc.; see [van den Brink et al., 2017]) even if the cells had the same profile in the original tissue. In general, the origins of batch effects are diverse and difficult to pin down. Some batch effect sources are technical, such as differences in sample handling, experimental protocols, or sequencing depths, but biological effects such as donor variation, tissue, or sampling location are also often interpreted as batch effects [Luecken et al., 2021]. Whether or not biological factors should be considered batch effects can depend on the experimental design and the question being asked.

Removing batch effects is crucial to enable joint analysis that focuses on finding common structure in the data across batches and to enable queries across datasets. Often it is only after removing these effects that rare cell populations, previously obscured by differences between batches, can be identified. Enabling queries across datasets allows us to ask questions that could not be answered by analysing individual datasets, such as “Which cell types express SARS-CoV-2 entry factors and how does this expression differ between individuals?” [Muus et al., 2021].

When removing batch effects from omics data, one must make two central choices: (1) the method and parameterization, and (2) the batch covariate. As batch effects can arise between groupings of cells at different levels (e.g., samples, donors, or datasets), the choice of batch covariate indicates which level of variation should be retained and which level removed. The finer the batch resolution, the more effects will be removed. However, fine batch variation is also more likely to be confounded with biologically meaningful signals. For example, samples typically come from different individuals or different locations in the tissue. These effects may be meaningful to inspect. Thus, the choice of batch covariate will depend on the goal of your integration task. Do you want to see differences between individuals, or are you focused on common cell type variation? An approach to batch covariate selection based on quantitative analyses was pioneered in a recent effort to build an integrated atlas of the human lung, where the variance attributable to different technical covariates was used to make this choice [Sikkema et al., 2022].

12.1.1. Types of integration models

Methods that remove batch effects in scRNA-seq are typically composed of (up to) three steps:

1. Dimensionality reduction
2. Modeling and removing the batch effect
3. Projection back into a high-dimensional space

While modeling and removing the batch effect (Step 2) is the central part of any batch removal method, many methods first project the data to a lower dimensional space (Step 1) to improve the signal-to-noise ratio (see the dimensionality reduction chapter) and perform batch correction in that space to achieve better performance (see [Luecken et al., 2021]). In the third step, a method may project the data back into the original high-dimensional feature space after removing the fitted batch effect to output a batch-corrected gene expression matrix.
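To make the three steps concrete, here is a minimal sketch on simulated data. It is not any published method: the toy matrix, the choice of 10 components, and the per-batch mean shift used as the "batch model" are all assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): 200 cells x 50 genes, two batches of 100 cells.
n_cells, n_genes = 200, 50
batch = np.repeat([0, 1], n_cells // 2)
X = rng.normal(size=(n_cells, n_genes))
X[batch == 1] += 2.0  # simulated additive batch effect

# Step 1: dimensionality reduction (PCA via SVD on gene-centered data).
gene_means = X.mean(axis=0)
U, S, Vt = np.linalg.svd(X - gene_means, full_matrices=False)
n_comps = 10
Z = U[:, :n_comps] * S[:n_comps]  # cell embedding
loadings = Vt[:n_comps]           # gene loadings

# Step 2: model and remove the batch effect in the embedding.
# The "model" here is just a per-batch mean shift (illustrative assumption).
Z_corrected = Z.copy()
for b in np.unique(batch):
    Z_corrected[batch == b] -= Z[batch == b].mean(axis=0)

# Step 3: project back into gene expression space using the loadings.
X_corrected = Z_corrected @ loadings + gene_means

# Difference between batch means per gene, before and after correction.
shift_before = X[batch == 1].mean(axis=0) - X[batch == 0].mean(axis=0)
shift_after = X_corrected[batch == 1].mean(axis=0) - X_corrected[batch == 0].mean(axis=0)
```

Methods that stop after Step 2 would return `Z_corrected` (an integrated embedding), while methods that include Step 3 return something like `X_corrected` (a corrected expression matrix).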

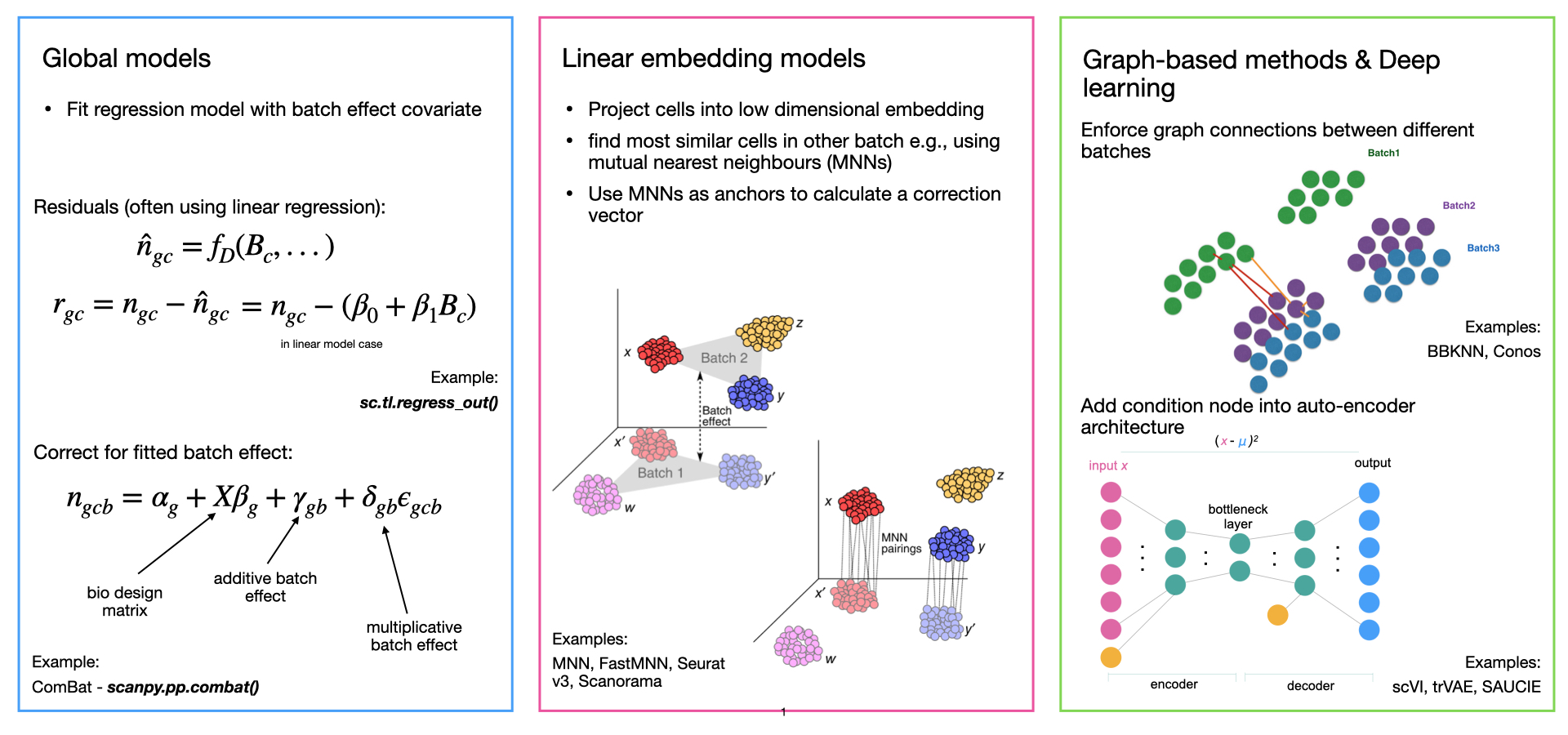

Batch-effect removal methods can vary in each of these three steps. They may use various linear or non-linear dimensionality reduction approaches, linear or non-linear batch effect models, and they may output different formats of batch-corrected data. Overall, we can divide methods for batch effect removal into four categories. In their order of development, these are global models, linear embedding models, graph-based methods, and deep learning approaches (Fig. I1).

Global models originate from bulk transcriptomics and model the batch effect as a consistent (additive and/or multiplicative) effect across all cells. A common example is ComBat [Johnson et al., 2007].
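As a rough illustration of what a consistent additive and multiplicative effect means, the sketch below standardizes each gene within each batch and rescales it to a shared mean and pooled within-batch standard deviation. This is a simplified location-scale adjustment written for this example, not ComBat itself; the function name `location_scale_correct` and the toy data are hypothetical.

```python
import numpy as np

def location_scale_correct(X, batch):
    """Remove a per-batch additive and multiplicative effect for each gene."""
    X = np.asarray(X, dtype=float)
    batch = np.asarray(batch)
    batches = np.unique(batch)
    grand_mean = X.mean(axis=0)
    # Pooled within-batch variance per gene (weighted by batch size)
    within_var = sum(
        X[batch == b].var(axis=0) * (batch == b).sum() for b in batches
    ) / len(batch)
    pooled_std = np.sqrt(within_var)
    corrected = np.empty_like(X)
    for b in batches:
        mask = batch == b
        mu = X[mask].mean(axis=0)
        sd = X[mask].std(axis=0)
        sd[sd == 0] = 1.0  # avoid division by zero for constant genes
        corrected[mask] = (X[mask] - mu) / sd * pooled_std + grand_mean
    return corrected

# Toy data (assumption): the second batch is scaled by 2 and shifted by 3.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
batch = np.repeat([0, 1], 50)
X[batch == 1] = X[batch == 1] * 2 + 3
corrected = location_scale_correct(X, batch)
```

Because the same shift and scale are applied to every cell in a batch, a model of this kind cannot account for batch effects that differ between cell types, which is one motivation for the locally adaptive methods described next.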

Linear embedding models were the first single-cell-specific batch removal methods. These approaches often use a variant of singular value decomposition (SVD) to embed the data, then look for local neighborhoods of similar cells across batches in the embedding, which they use to correct the batch effect in a locally adaptive (non-linear) manner. Methods often project the data back into gene expression space using the SVD loadings, but may also only output a corrected embedding. This is the most common group of methods and prominent examples include the pioneering mutual nearest neighbors (MNN) method [Haghverdi et al., 2018] (which does not perform any dimensionality reduction), Seurat integration [Butler et al., 2018, Stuart et al., 2019], Scanorama [Hie et al., 2019], FastMNN [Haghverdi et al., 2018], and Harmony [Korsunsky et al., 2019].
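The idea of looking for neighborhoods of similar cells across batches can be sketched as a search for mutual nearest neighbors. The code below is a brute-force toy version under simple assumptions (Euclidean distance, two small batches, a single averaged correction vector); the function name is hypothetical, and published methods smooth correction vectors locally rather than averaging them globally.

```python
import numpy as np

def mutual_nearest_neighbors(A, B, k=1):
    """Return pairs (i, j) where A[i] and B[j] are among each other's k nearest neighbours."""
    # Pairwise Euclidean distances between cells in batch A and batch B
    dist = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    nn_a_in_b = np.argsort(dist, axis=1)[:, :k]    # for each A cell, its k nearest B cells
    nn_b_in_a = np.argsort(dist, axis=0)[:k, :].T  # for each B cell, its k nearest A cells
    pairs = []
    for i in range(A.shape[0]):
        for j in nn_a_in_b[i]:
            if i in nn_b_in_a[j]:
                pairs.append((i, int(j)))
    return pairs

# Toy embedding (assumption): batch B is batch A shifted by (1, 1).
A = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
B = A + np.array([1.0, 1.0])

pairs = mutual_nearest_neighbors(A, B, k=1)

# Estimate the batch shift as the mean difference over matched pairs
shift = np.mean([B[j] - A[i] for i, j in pairs], axis=0)
B_corrected = B - shift
```

Matching only mutual neighbors means that cell populations present in just one batch tend not to be paired, so they contribute less to the estimated correction.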

Graph-based methods are typically the fastest methods to run. These approaches use a nearest-neighbor graph to represent the data from each batch. Batch effects are corrected by forcing connections between cells from different batches and then allowing for differences in cell type compositions by pruning the forced edges. The most prominent example of these approaches is the Batch-Balanced k-Nearest Neighbor (BBKNN) method [Polański et al., 2019].
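The "forced connections" step can be illustrated by searching for neighbors separately within each batch, so that every cell is linked to cells from all batches. The sketch below is a brute-force toy written for this explanation, not the BBKNN implementation; the function name and parameters are hypothetical, and the edge-pruning step is omitted.

```python
import numpy as np

def batch_balanced_neighbors(Z, batch, k_per_batch=2):
    """For every cell, find its nearest neighbours separately within each batch."""
    Z = np.asarray(Z, dtype=float)
    batch = np.asarray(batch)
    neighbors = []
    for b in np.unique(batch):
        idx = np.flatnonzero(batch == b)
        # Distances from every cell to the cells of batch b
        dist = np.linalg.norm(Z[:, None, :] - Z[idx][None, :, :], axis=2)
        # A cell should not be its own neighbour
        self_mask = np.arange(Z.shape[0])[:, None] == idx[None, :]
        dist[self_mask] = np.inf
        nearest = np.argsort(dist, axis=1)[:, :k_per_batch]
        neighbors.append(idx[nearest])
    # One row per cell, k_per_batch columns per batch
    return np.hstack(neighbors)

# Toy embedding (assumption): 20 cells in 3 dimensions, two batches of 10.
rng = np.random.default_rng(0)
Z = rng.normal(size=(20, 3))
batch = np.repeat(["a", "b"], 10)
nbrs = batch_balanced_neighbors(Z, batch, k_per_batch=2)
```

Each row of `nbrs` holds the same number of neighbors from every batch, which is what balances the resulting graph. Without a pruning step, cell types found in only one batch would still be connected to unrelated cells in the others.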

Deep learning (DL) approaches are the most recent and most complex methods for batch effect removal, and they typically require the most data for good performance. Most deep learning integration methods are based on autoencoder networks, and either condition the dimensionality reduction on the batch covariate in a conditional variational autoencoder (CVAE) or fit a locally linear correction in the embedded space. Prominent examples of DL methods are scVI [Lopez et al., 2018], scANVI [Xu et al., 2021], and scGen [Lotfollahi et al., 2019].

Some methods can use cell identity labels to provide the method with a reference for what biological variation should not be removed as a batch effect. As batch-effect removal is typically a preprocessing task, such approaches may not be applicable to many integration scenarios as labels are generally not yet available at this stage.

More detailed overviews of batch-effect removal methods can be found in [Argelaguet et al., 2021] and [Luecken et al., 2021].

Fig. I1: Overview of different types of integration methods with examples.


12.1.2. Batch removal complexity

The removal of batch effects in scRNA-seq data has previously been divided into two subtasks: batch correction and data integration [Luecken and Theis, 2019]. These subtasks differ in the complexity of the batch effect that must be removed. Batch correction methods deal with batch effects between samples in the same experiment where cell identity compositions are consistent, and the effect is often quasi-linear. In contrast, data integration methods deal with complex, often nested, batch effects between datasets that may be generated with different protocols and where cell identities may not be shared across batches. While we use this distinction here, note that these terms are often used interchangeably in general use. Given the differences in complexity, it is not surprising that different methods have been benchmarked as being optimal for these two subtasks.

12.1.3. Comparisons of data integration methods

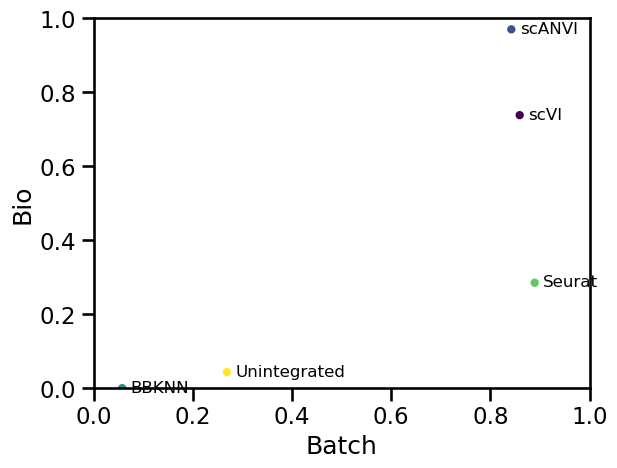

Several benchmarks have previously evaluated the performance of methods for batch correction and data integration. When removing batch effects, methods may overcorrect and remove meaningful biological variation in addition to the batch effect. For this reason, it is important that integration performance is evaluated by considering both batch effect removal and the conservation of biological variation.
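The need to score both aspects can be illustrated with a toy neighborhood statistic: the fraction of each cell's nearest neighbors that share its batch (lower suggests better mixing) or its cell type (higher suggests better conservation). This is a deliberately simple stand-in written for this explanation, not kBET or any published metric, and the simulated embedding is an assumption.

```python
import numpy as np

def neighbor_fraction_same(Z, labels, k=5):
    """Mean fraction of each cell's k nearest neighbours that share its label."""
    Z = np.asarray(Z, dtype=float)
    labels = np.asarray(labels)
    dist = np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)  # exclude self-matches
    nn = np.argsort(dist, axis=1)[:, :k]
    return float((labels[nn] == labels[:, None]).mean())

# Toy embedding (assumption): cell type separates along axis 0, batch along axis 1.
rng = np.random.default_rng(0)
batch = np.repeat([0, 1], 100)
cell_type = np.tile(np.repeat([0, 1], 50), 2)
Z = rng.normal(scale=0.5, size=(200, 2))
Z[:, 0] += 10 * cell_type
Z[:, 1] += 10 * batch

# An idealised correction that removes only the batch shift
Z_corrected = Z.copy()
Z_corrected[:, 1] -= 10 * batch

batch_before = neighbor_fraction_same(Z, batch)
batch_after = neighbor_fraction_same(Z_corrected, batch)
type_after = neighbor_fraction_same(Z_corrected, cell_type)
```

A correction that lowered the batch score but also lowered the cell type score would indicate overcorrection, which is why neither number is informative on its own.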

The k-nearest-neighbor Batch-Effect Test (kBET) was the first metric for quantifying batch correction of scRNA-seq data [Büttner et al., 2019]. Using kBET, the authors found that ComBat outperformed other approaches for batch correction while comparing predominantly global models. Building on this, two recent benchmarks [Tran et al., 2020] and [Chazarra-Gil et al., 2021] also benchmarked linear-embedding and deep-learning models on batch correction tasks with few batches or low biological complexity. These studies found that the linear-embedding models Seurat [Butler et al., 2018, Stuart et al., 2019] and Harmony [Korsunsky et al., 2019] performed well for simple batch correction tasks.

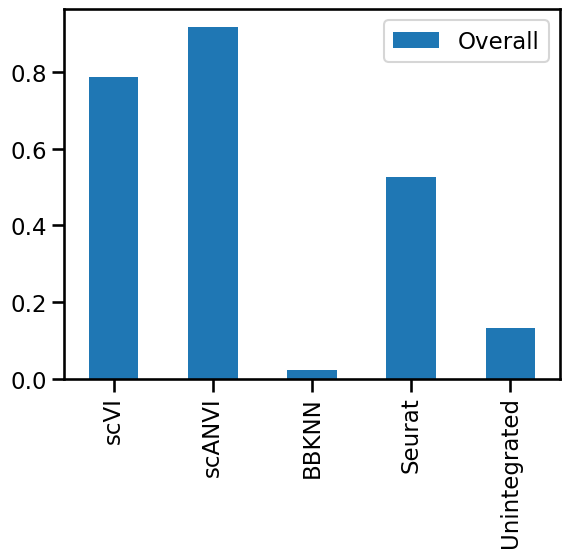

Benchmarking complex integration tasks poses additional challenges due to both the size and number of datasets as well as the diversity of scenarios. Recently, a large study used 14 metrics to benchmark 16 methods across integration method classes on five RNA tasks and two simulations [Luecken et al., 2021]. While top-performing methods per task differed, approaches that use cell type labels performed better across tasks. Furthermore, deep learning approaches scANVI (with labels), scVI, and scGen (with labels), as well as the linear embedding model Scanorama, performed best, particularly on complex tasks, while Harmony performed well on less complex tasks. A similar benchmark performed for the specific purpose of integrating retina datasets to build an ocular mega-atlas also found that scVI outperformed other methods [Swamy et al., 2021].

12.1.4. Choosing an integration method

While integration methods have now been extensively benchmarked, an optimal method for all scenarios does not exist. Packages of integration performance metrics and evaluation pipelines like scIB and batchbench can be used to evaluate integration performance on your own data. However, many metrics (particularly those that measure the conservation of biological variation) require ground-truth cell identity labels. Parameter optimization may tune many methods to work for particular tasks, yet in general, one can say that Harmony and Seurat consistently perform well for simple batch correction tasks, and scVI, scGen, scANVI, and Scanorama perform well for more complex data integration tasks. When choosing a method, we recommend looking into these options first. Additionally, integration method choice may be guided by the required output data format (i.e., do you need corrected gene expression data or does an integrated embedding suffice?). It would be prudent to test multiple methods and evaluate the outputs on the basis of quantitative definitions of success before selecting one. Extensive guidelines for data integration method choice can be found in [Luecken et al., 2021].

In the rest of this chapter, we demonstrate some of the best-performing methods and briefly show how integration performance can be evaluated.

# Python packages

import anndata2ri

import bbknn

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import scanpy as sc

import scib

import scvi

# R interface

from rpy2.robjects import pandas2ri

pandas2ri.activate()

anndata2ri.activate()

%load_ext rpy2.ipython


%%R

# R packages

library(Seurat)


12.2. Dataset

The dataset we will use to demonstrate data integration contains several samples of bone marrow mononuclear cells. These samples were originally created for the Open Problems in Single-Cell Analysis NeurIPS Competition 2021 [Lance et al., 2022, Luecken et al., 2022]. The 10x Multiome protocol was used which measures both RNA expression (scRNA-seq) and chromatin accessibility (scATAC-seq) in the same cells. The version of the data we use here was already pre-processed to remove low-quality cells.

Let’s read in the dataset using scanpy to get an AnnData object.

!wget https://figshare.com/ndownloader/files/45452260 -O openproblems_bmmc_multiome_genes_filtered.h5ad

adata_raw = sc.read_h5ad("openproblems_bmmc_multiome_genes_filtered.h5ad")

adata_raw.layers["logcounts"] = adata_raw.X

adata_raw


AnnData object with n_obs × n_vars = 69249 × 129921

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap'

layers: 'counts', 'logcounts'

The full dataset contains 69,249 cells and measurements for 129,921 features. There are two versions of the expression matrix: counts, which contains the raw count values, and logcounts, which contains normalised log counts (these values are also stored in adata.X).

The obs slot contains several variables, some of which were calculated during pre-processing (for quality control) and others that contain metadata about the samples. The ones we are interested in here are:

- cell_type: The annotated label for each cell
- batch: The sequencing batch for each cell

For a real analysis, it would be important to consider more variables, but to keep it simple here we will only look at these.

We define variables to hold these names so that it is clear how we are using them in the code. This also helps with reproducibility because if we decided to change one of them for whatever reason we can be sure it has changed in the whole notebook.

label_key = "cell_type"

batch_key = "batch"

What to use as the batch label?

As mentioned above, deciding what to use as a “batch” for data integration is one of the central choices when integrating your data. The most common approach is to define each sample as a batch (as we have here) which generally produces the strongest batch correction. However, samples are usually confounded with biological factors that you may want to preserve. For example, imagine an experiment that took samples from two locations in a tissue. If samples are considered as batches then data integration methods will attempt to remove differences between them and therefore differences between the locations. In this case, it may be more appropriate to use the donor as the batch to remove differences between individuals but not between locations. The planned analysis should also be considered. In cases where you are integrating many datasets and want to capture differences between individuals, the dataset may be a useful batch covariate. In our example, it may be better to have consistent cell type labels for the two locations and then test for differences between them than to have separate clusters for each location which need to be annotated separately and then matched.

The issue of confounding between samples and biological factors can be mitigated through careful experimental design that minimizes the batch effect overall by using multiplexing techniques that allow biological samples to be combined into a single sequencing sample. However, this is not always possible and requires both extra processing in the lab and extra computational steps.
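One quick way to see whether a candidate batch covariate is confounded with a biological factor is to cross-tabulate the two. The sketch below uses a small made-up metadata table matching the two-location example above; the column names `sample`, `donor`, and `location` are hypothetical and do not refer to columns in the dataset used in this chapter.

```python
import pandas as pd

# Hypothetical cell metadata: four samples from two donors and two tissue locations
obs = pd.DataFrame(
    {
        "sample": ["s1", "s1", "s2", "s2", "s3", "s3", "s4", "s4"],
        "donor": ["d1", "d1", "d1", "d1", "d2", "d2", "d2", "d2"],
        "location": [
            "proximal", "proximal", "distal", "distal",
            "proximal", "proximal", "distal", "distal",
        ],
    }
)

# Count cells for each combination of covariate level and location
sample_by_location = pd.crosstab(obs["sample"], obs["location"])
donor_by_location = pd.crosstab(obs["donor"], obs["location"])

# A covariate is fully confounded with location if every level occurs in only one location
sample_confounded = bool(((sample_by_location > 0).sum(axis=1) == 1).all())
donor_confounded = bool(((donor_by_location > 0).sum(axis=1) == 1).all())
```

In this toy table every sample comes from a single location, so using the sample as the batch would ask the integration method to remove location differences too, whereas each donor spans both locations.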

Let’s have a look at the different batches and how many cells we have for each.

adata_raw.obs[batch_key].value_counts()

s4d8 9876

s4d1 8023

s3d10 6781

s1d2 6740

s1d1 6224

s2d4 6111

s2d5 4895

s3d3 4325

s4d9 4325

s1d3 4279

s2d1 4220

s3d7 1771

s3d6 1679

Name: batch, dtype: int64

There are 13 different batches in the dataset. During this experiment, multiple samples were taken from a set of donors and sequenced at different facilities, so the names here are a combination of the sample number (e.g. “s1”) and the donor (e.g. “d2”). For simplicity, and to reduce computational time, we will select three samples to use.

keep_batches = ["s1d3", "s2d1", "s3d7"]

adata = adata_raw[adata_raw.obs[batch_key].isin(keep_batches)].copy()

adata

AnnData object with n_obs × n_vars = 10270 × 129921

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap'

layers: 'counts', 'logcounts'

After subsetting to select these batches we are left with 10,270 cells.
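As a quick sanity check, the sketch below uses only the cell counts printed above. It splits each batch name into its first part (such as `s1`) and its donor part (such as `d3`), shows how many cells fall into each group at these coarser levels, and confirms the size of our three-batch subset. The regular expression is an assumption based on the naming pattern described above.

```python
import re
from collections import Counter

# Cells per batch, copied from the value_counts() output above.
cells_per_batch = {
    "s4d8": 9876, "s4d1": 8023, "s3d10": 6781, "s1d2": 6740, "s1d1": 6224,
    "s2d4": 6111, "s2d5": 4895, "s3d3": 4325, "s4d9": 4325, "s1d3": 4279,
    "s2d1": 4220, "s3d7": 1771, "s3d6": 1679,
}

# Aggregate the counts by the first part of the name and by the donor part.
by_prefix = Counter()
by_donor = Counter()
for name, n_cells in cells_per_batch.items():
    prefix, donor = re.fullmatch(r"(s\d+)(d\d+)", name).groups()
    by_prefix[prefix] += n_cells
    by_donor[donor] += n_cells

# Size of the subset selected for this chapter.
keep_batches = ["s1d3", "s2d1", "s3d7"]
n_kept = sum(cells_per_batch[batch] for batch in keep_batches)

print(dict(by_prefix))
print(dict(by_donor))
print(n_kept)
```

Grouping at a coarser level gives fewer, larger batches, which is the trade-off discussed in the box on choosing a batch label.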

We have two annotations for the features stored in var:

- feature_types - The type of each feature (RNA or ATAC)
- gene_id - The gene associated with each feature

Let’s have a look at the feature types.

adata.var["feature_types"].value_counts()

ATAC 116490

GEX 13431

Name: feature_types, dtype: int64

We can see that there are over 100,000 ATAC features but only around 13,000 gene expression (“GEX”) features. Integration of multiple modalities is a complex problem that will be described in the multimodal integration chapter, so for now we will subset to only the gene expression features. We also perform simple filtering to make sure we have no features with zero counts (this is necessary because by selecting a subset of samples we may have removed all the cells which expressed a particular feature).

adata = adata[:, adata.var["feature_types"] == "GEX"].copy()

sc.pp.filter_genes(adata, min_cells=1)

adata

AnnData object with n_obs × n_vars = 10270 × 13431

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id', 'n_cells'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap'

layers: 'counts', 'logcounts'
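In this dataset the filter did not remove anything (13,431 features remain), but the following toy sketch shows why the check is worthwhile. It uses a small hypothetical count matrix in plain Python rather than scanpy, and mimics the idea of a `min_cells=1` filter after subsetting.

```python
# Toy count matrix: rows are cells, columns are features (hypothetical values).
counts = [
    [3, 0, 1],  # cell from batch "a"
    [2, 0, 0],  # cell from batch "a"
    [0, 5, 2],  # cell from batch "b"
]
batches = ["a", "a", "b"]
features = ["gene1", "gene2", "gene3"]

# Keep only the cells from batch "a".
subset = [row for row, batch in zip(counts, batches) if batch == "a"]

# Number of cells in which each feature is detected (count > 0).
n_cells = [sum(row[j] > 0 for row in subset) for j in range(len(features))]

# Same idea as a min_cells=1 filter: drop features detected in no remaining cell.
kept_features = [f for f, n in zip(features, n_cells) if n >= 1]
print(n_cells, kept_features)
```

Here `gene2` was only expressed in the cell we removed, so it ends up with zero counts in the subset and is dropped.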

Because of the subsetting we also need to re-normalise the data. Here we just normalise using global scaling by the total counts per cell.

adata.X = adata.layers["counts"].copy()

sc.pp.normalize_total(adata)

sc.pp.log1p(adata)

adata.layers["logcounts"] = adata.X.copy()
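To illustrate what these two steps do, here is a small library-free sketch on hypothetical counts. It assumes the scanpy default where, if no target sum is given, each cell is scaled to the median of the total counts per cell, and then applies a log(1 + x) transformation.

```python
import math
from statistics import median

# Hypothetical counts: rows are cells, columns are genes.
counts = [
    [4, 0, 6],    # total 10
    [10, 5, 5],   # total 20
    [0, 30, 10],  # total 40
]

totals = [sum(row) for row in counts]
target = median(totals)  # each cell is scaled to this total

# Global scaling: divide by the cell total and multiply by the target.
normalised = [
    [value * target / total for value in row]
    for row, total in zip(counts, totals)
]

# log(1 + x) keeps zeros at zero and compresses large values.
logcounts = [[math.log1p(value) for value in row] for row in normalised]
print(target, [round(sum(row), 6) for row in normalised])
```

After scaling, every cell has the same total, so differences in sequencing depth between cells no longer dominate the comparison.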

We will use this dataset to demonstrate integration.

Most integration methods require a single object containing all the samples and a batch variable (like we have here). If instead, you have separate objects for each of your samples you can join them using the anndata concat() function. See the concatenation tutorial for more details. Similar functionality exists in other ecosystems.

12.3. Unintegrated data#

It is always recommended to look at the raw data before performing any integration. This can give some indication of how big any batch effects are and what might be causing them (and therefore which variables to consider as the batch label). For some experiments, it might even suggest that integration is not required if samples already overlap. This is not uncommon for mouse or cell line studies from a single lab for example, where most of the variables which contribute to batch effects can be controlled (i.e. the batch correction setting).

We will perform highly variable gene (HVG) selection, PCA and UMAP dimensionality reduction as we have seen in previous chapters.

sc.pp.highly_variable_genes(adata)

sc.tl.pca(adata)

sc.pp.neighbors(adata)

sc.tl.umap(adata)

adata

AnnData object with n_obs × n_vars = 10270 × 13431

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id', 'n_cells', 'highly_variable', 'means', 'dispersions', 'dispersions_norm'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism', 'log1p', 'hvg', 'pca', 'neighbors', 'umap'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap', 'X_pca', 'X_umap'

varm: 'PCs'

layers: 'counts', 'logcounts'

obsp: 'distances', 'connectivities'

This adds several new items to our AnnData object. The var slot now includes means, dispersions and the selected variable genes. In the obsp slot we have distances and connectivities for our KNN graph and in obsm are the PCA and UMAP embeddings.

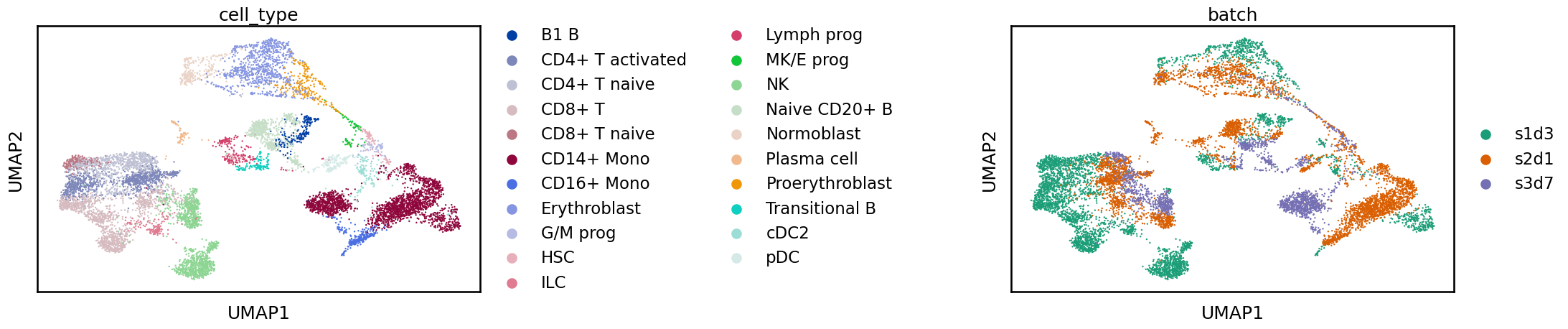

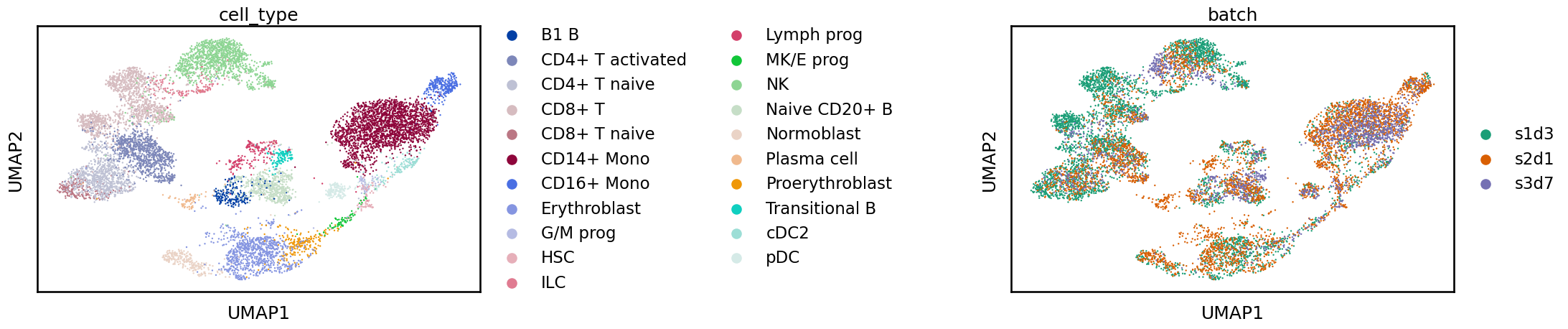

Let’s plot the UMAP, colouring the points by cell identity and batch labels. If the dataset had not already been labelled (which is often the case) we would only be able to consider the batch labels.

adata.uns[batch_key + "_colors"] = [

"#1b9e77",

"#d95f02",

"#7570b3",

] # Set custom colours for batches

sc.pl.umap(adata, color=[label_key, batch_key], wspace=1)


Often when looking at these plots you will see a clear separation between batches. In this case, what we see is more subtle and while cells from the same label are generally near each other there is a shift between batches. If we were to perform a clustering analysis using this raw data we would probably end up with some clusters containing a single batch which would be difficult to interpret at the annotation stage. We are also likely to overlook rare cell types which are not common enough in any single sample to produce their own cluster. While UMAPs can often display batch effects, as always when considering these 2D representations it is important not to overinterpret them. For a real analysis, you should confirm the integration in other ways such as by inspecting the distribution of marker genes. In the “Benchmarking your own integration” section below we discuss metrics for quantifying the quality of an integration.

Now that we have confirmed there are batch effects to correct we can move on to the different integration methods. If the batches perfectly overlaid each other or we could discover meaningful cell clusters without correction then there would be no need to perform integration.

12.4. Batch-aware feature selection#

As shown in previous chapters we often select a subset of genes to use for our analysis in order to reduce noise and processing time. We do the same thing when we have multiple samples, however, it is important that gene selection is performed in a batch-aware way. This is because genes that are variable across the whole dataset could be capturing batch effects rather than the biological signals we are interested in. It also helps to select genes relevant to rare cell identities, for example, if an identity is only present in one sample then markers for it may not be variable across all the samples but should be in that one sample.

We can perform batch-aware highly variable gene selection by setting the batch_key argument in the scanpy highly_variable_genes() function. scanpy will then calculate HVGs for each batch separately and combine the results by selecting those genes that are highly variable in the highest number of batches. We use the scanpy function here because it has this batch awareness built in. For other methods, we would have to run them on each batch individually and then manually combine the results.

sc.pp.highly_variable_genes(

adata, n_top_genes=2000, flavor="cell_ranger", batch_key=batch_key

)

adata.var


| | feature_types | gene_id | n_cells | highly_variable | means | dispersions | dispersions_norm | highly_variable_nbatches | highly_variable_intersection |
|---|---|---|---|---|---|---|---|---|---|
| AL627309.5 | GEX | ENSG00000241860 | 112 | False | 0.006533 | 0.775289 | 0.357973 | 1 | False |
| LINC01409 | GEX | ENSG00000237491 | 422 | False | 0.024462 | 0.716935 | -0.126119 | 0 | False |
| LINC01128 | GEX | ENSG00000228794 | 569 | False | 0.030714 | 0.709340 | -0.296701 | 0 | False |
| NOC2L | GEX | ENSG00000188976 | 675 | False | 0.037059 | 0.704363 | -0.494025 | 0 | False |
| KLHL17 | GEX | ENSG00000187961 | 88 | False | 0.005295 | 0.721757 | -0.028456 | 0 | False |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| MT-ND5 | GEX | ENSG00000198786 | 4056 | False | 0.269375 | 0.645837 | -0.457491 | 0 | False |
| MT-ND6 | GEX | ENSG00000198695 | 1102 | False | 0.051506 | 0.697710 | -0.248421 | 0 | False |
| MT-CYB | GEX | ENSG00000198727 | 5647 | False | 0.520368 | 0.613233 | -0.441362 | 0 | False |
| AL592183.1 | GEX | ENSG00000273748 | 732 | False | 0.047486 | 0.753417 | 0.413699 | 0 | False |
| AC240274.1 | GEX | ENSG00000271254 | 43 | False | 0.001871 | 0.413772 | -0.386635 | 0 | False |

13431 rows × 9 columns

We can see there are now some additional columns in var:

- highly_variable_nbatches - The number of batches where each gene was found to be highly variable
- highly_variable_intersection - Whether each gene was highly variable in every batch
- highly_variable - Whether each gene was selected as highly variable after combining the results from each batch
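The way these columns relate to each other can be sketched with a toy example. The per-batch HVG sets and the tie-breaking score below are hypothetical, and the ranking rule (number of batches first, then a score) is a simplified stand-in for what scanpy does internally rather than its actual implementation.

```python
# Hypothetical per-batch HVG calls for five genes across three batches.
hvgs_per_batch = {
    "batch1": {"geneA", "geneB", "geneC"},
    "batch2": {"geneA", "geneB", "geneD"},
    "batch3": {"geneA", "geneE", "geneD"},
}
# Hypothetical tie-breaking score (for example a mean normalised dispersion).
score = {"geneA": 2.0, "geneB": 1.5, "geneC": 0.9, "geneD": 1.2, "geneE": 0.4}

genes = sorted(score)

# Analogue of highly_variable_nbatches: in how many batches is each gene an HVG?
n_batches = {g: sum(g in hvgs for hvgs in hvgs_per_batch.values()) for g in genes}

# Analogue of highly_variable_intersection: is the gene an HVG in every batch?
intersection = {g: n_batches[g] == len(hvgs_per_batch) for g in genes}

# Analogue of highly_variable: rank by number of batches, then score, keep the top n.
n_top_genes = 3
ranked = sorted(genes, key=lambda g: (n_batches[g], score[g]), reverse=True)
selected = ranked[:n_top_genes]
print(n_batches, selected)
```

Only `geneA` is in the intersection, but `geneB` and `geneD` are still selected because they are variable in two of the three batches.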

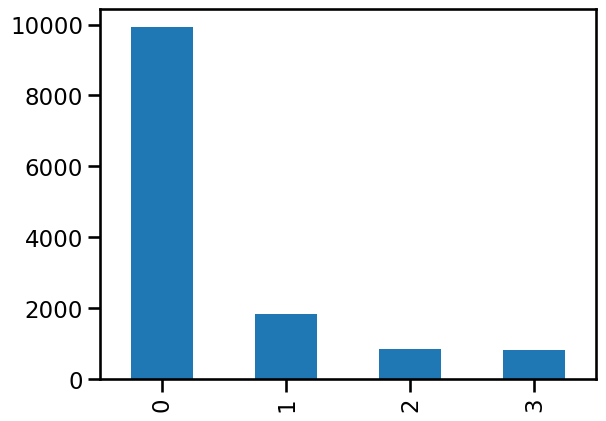

Let’s check how many batches each gene was variable in:

n_batches = adata.var["highly_variable_nbatches"].value_counts()

ax = n_batches.plot(kind="bar")

n_batches

0 9931

1 1824

2 852

3 824

Name: highly_variable_nbatches, dtype: int64

The first thing we notice is that most genes are not highly variable in any batch. This is typically the case but it can depend on how different the samples we are trying to integrate are. The overlap then decreases as we add more samples, with relatively few genes being highly variable in all three batches. By selecting the top 2000 genes we have selected all HVGs that are present in two or three batches (852 + 824 = 1676 genes) and filled the remaining places with some of those that are present in only one batch.
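The arithmetic behind this selection can be checked directly from the counts above. This sketch assumes that genes are ranked by the number of batches in which they are highly variable, as described earlier, and simply fills the 2000 places from the top.

```python
# Genes by number of batches in which they were highly variable (from the output above).
genes_by_n_batches = {0: 9931, 1: 1824, 2: 852, 3: 824}
n_top_genes = 2000
n_batches_total = 3

# Fill the selection starting with genes that are variable in the most batches.
remaining = n_top_genes
taken = {}
for n in sorted(genes_by_n_batches, reverse=True):
    if n == 0:
        continue  # genes that are never highly variable are not candidates
    taken[n] = min(genes_by_n_batches[n], remaining)
    remaining -= taken[n]

# Consistency check: each batch contributes n_top_genes HVG calls in total.
per_batch_calls = sum(n * count for n, count in genes_by_n_batches.items())
print(taken, per_batch_calls)
```

All genes that are variable in two or three batches fit into the selection, and the remaining 324 places go to genes that are variable in a single batch.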

How many genes to use?

This is a question that doesn’t have a clear answer. The authors of the scvi-tools package which we use below recommend between 1000 and 10000 genes but how many depends on the context including the complexity of the dataset and the number of batches. A survey from a previous best practices paper [Luecken and Theis, 2019] indicated people typically use between 1000 and 6000 HVGs in a standard analysis. While selecting fewer genes can aid in the removal of batch effects [Luecken et al., 2021] (the most highly-variable genes often describe only dominant biological variation), we recommend selecting slightly too many genes rather than selecting too few and risk removing genes that are important for a rare cell type or a pathway of interest. It should however be noted that more genes will also increase the time required to run the integration methods.

We will create an object with just the selected genes to use for integration.

adata_hvg = adata[:, adata.var["highly_variable"]].copy()

adata_hvg

AnnData object with n_obs × n_vars = 10270 × 2000

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id', 'n_cells', 'highly_variable', 'means', 'dispersions', 'dispersions_norm', 'highly_variable_nbatches', 'highly_variable_intersection'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism', 'log1p', 'hvg', 'pca', 'neighbors', 'umap', 'batch_colors', 'cell_type_colors'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap', 'X_pca', 'X_umap'

varm: 'PCs'

layers: 'counts', 'logcounts'

obsp: 'distances', 'connectivities'

12.5. Variational autoencoder (VAE) based integration#

The first integration method we will use is scVI (single-cell Variational Inference), a method based on a conditional variational autoencoder [Lopez et al., 2018] available in the scvi-tools package [Gayoso et al., 2022]. A variational autoencoder is a type of artificial neural network which attempts to reduce the dimensionality of a dataset. The conditional part refers to conditioning this dimensionality reduction process on a particular covariate (in this case batches) such that the covariate does not affect the low-dimensional representation. In benchmarking studies scVI has been shown to perform well across a range of datasets with a good balance of batch correction while conserving biological variability [Luecken et al., 2021]. scVI models raw counts directly, so it is important that we provide it with a count matrix rather than a normalized expression matrix.

First, let’s make a copy of our dataset to use for this integration. Normally it is not necessary to do this but as we will demonstrate multiple integration methods making a copy makes it easier to show what has been added by each method.

adata_scvi = adata_hvg.copy()

12.5.1. Data preparation#

The first step in using scVI is to prepare our AnnData object. This step stores some information required by scVI such as which expression matrix to use and what the batch key is.

scvi.model.SCVI.setup_anndata(adata_scvi, layer="counts", batch_key=batch_key)

adata_scvi

AnnData object with n_obs × n_vars = 10270 × 2000

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker', '_scvi_batch', '_scvi_labels'

var: 'feature_types', 'gene_id', 'n_cells', 'highly_variable', 'means', 'dispersions', 'dispersions_norm', 'highly_variable_nbatches', 'highly_variable_intersection'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism', 'log1p', 'hvg', 'pca', 'neighbors', 'umap', 'batch_colors', 'cell_type_colors', '_scvi_uuid', '_scvi_manager_uuid'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap', 'X_pca', 'X_umap'

varm: 'PCs'

layers: 'counts', 'logcounts'

obsp: 'distances', 'connectivities'

The fields created by scVI are prefixed with _scvi. These are designed for internal use and should not be manually modified. The general advice from the scvi-tools authors is to not make any changes to our object until after the model is trained. On other datasets, you may see a warning about the input expression matrix containing unnormalised count data. This usually means you should check that the layer provided to the setup function does actually contain count values but it can also happen if you have values from performing gene length correction on data from a full-length protocol or from another quantification method that does not produce integer counts.

12.5.2. Building the model#

We can now construct an scVI model object. As well as the scVI model we use here, the scvi-tools package contains various other models (we will use the scANVI model below).

model_scvi = scvi.model.SCVI(adata_scvi)

model_scvi

SCVI Model with the following params: n_hidden: 128, n_latent: 10, n_layers: 1, dropout_rate: 0.1, dispersion: gene, gene_likelihood: zinb, latent_distribution: normal Training status: Not Trained

The scVI model object contains the provided AnnData object as well as the neural network for the model itself. You can see that currently the model is not trained. If we wanted to modify the structure of the network we could provide additional arguments to the model construction function but here we just use the defaults.

We can also print a more detailed description of the model that shows us where things are stored in the associated AnnData object.

model_scvi.view_anndata_setup()

Anndata setup with scvi-tools version 0.18.0.

Setup via `SCVI.setup_anndata` with arguments:

{ │ 'layer': 'counts', │ 'batch_key': 'batch', │ 'labels_key': None, │ 'size_factor_key': None, │ 'categorical_covariate_keys': None, │ 'continuous_covariate_keys': None }

Summary Statistics ┏━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━┓ ┃ Summary Stat Key ┃ Value ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━┩ │ n_batch │ 3 │ │ n_cells │ 10270 │ │ n_extra_categorical_covs │ 0 │ │ n_extra_continuous_covs │ 0 │ │ n_labels │ 1 │ │ n_vars │ 2000 │ └──────────────────────────┴───────┘

Data Registry ┏━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━┓ ┃ Registry Key ┃ scvi-tools Location ┃ ┡━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━┩ │ X │ adata.layers['counts'] │ │ batch │ adata.obs['_scvi_batch'] │ │ labels │ adata.obs['_scvi_labels'] │ └──────────────┴───────────────────────────┘

batch State Registry ┏━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━┓ ┃ Source Location ┃ Categories ┃ scvi-tools Encoding ┃ ┡━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━┩ │ adata.obs['batch'] │ s1d3 │ 0 │ │ │ s2d1 │ 1 │ │ │ s3d7 │ 2 │ └────────────────────┴────────────┴─────────────────────┘

labels State Registry ┏━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━┓ ┃ Source Location ┃ Categories ┃ scvi-tools Encoding ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━┩ │ adata.obs['_scvi_labels'] │ 0 │ 0 │ └───────────────────────────┴────────────┴─────────────────────┘

Here we can see exactly what information has been assigned by scVI, including details like how each different batch is encoded in the model.

12.5.3. Training the model#

The model will be trained for a given number of epochs, where an epoch is one training iteration in which every cell is passed through the network. By default scVI uses the following heuristic to set the number of epochs: for datasets with up to 20,000 cells, 400 epochs are used, and as the number of cells grows above 20,000 the number of epochs is reduced proportionally. The reasoning behind this is that as the network sees more cells during each epoch it can learn the same amount of information as it would from more epochs with fewer cells.

max_epochs_scvi = np.min([round((20000 / adata.n_obs) * 400), 400])

max_epochs_scvi

400
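To see how this heuristic behaves for larger datasets, here is a small sketch that wraps the same formula in a function and evaluates it for a few dataset sizes. The function name and its parameter names are our own and are not part of scvi-tools.

```python
def scvi_epoch_heuristic(n_cells, max_epochs_cap=400, reference_cells=20000):
    """Number of training epochs under the heuristic described above."""
    return min(round((reference_cells / n_cells) * max_epochs_cap), max_epochs_cap)


# Our dataset (10,270 cells) and some larger hypothetical datasets.
epochs_by_size = {n: scvi_epoch_heuristic(n) for n in (10270, 20000, 40000, 100000)}
for n_cells, epochs in epochs_by_size.items():
    print(f"{n_cells:>7} cells -> {epochs} epochs")
```

Doubling the number of cells beyond 20,000 halves the number of epochs, so the total number of cells seen during training stays roughly constant.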

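We now train the model. Because we do not set the number of epochs explicitly, scVI applies the heuristic above itself, which gives 400 epochs for this dataset (this will take ~20-40 minutes depending on the computer you are using).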

model_scvi.train()

GPU available: False, used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

Epoch 400/400: 100%|██████████| 400/400 [15:49<00:00, 2.27s/it, loss=648, v_num=1]

`Trainer.fit` stopped: `max_epochs=400` reached.

Epoch 400/400: 100%|██████████| 400/400 [15:49<00:00, 2.37s/it, loss=648, v_num=1]

Early stopping

In addition to setting a target number of epochs, it is possible to set early_stopping=True in the training function. This lets scVI stop training early depending on the convergence of the model. The exact conditions for stopping can be controlled by other parameters.
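The general idea behind early stopping can be sketched with a generic patience rule: training stops once the monitored loss has not improved for a set number of consecutive epochs. This is an illustration of the concept only, not the scvi-tools implementation, and the function, its parameters and the loss values are all hypothetical.

```python
def stopping_epoch(validation_losses, patience=3, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None if it runs to the end."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses, start=1):
        if loss < best - min_delta:
            # The loss improved, so remember it and reset the counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Hypothetical loss curve that flattens out after the fourth epoch.
losses = [700.0, 660.0, 650.0, 649.0, 649.5, 649.2, 649.8, 649.1]
stopped_at = stopping_epoch(losses, patience=3)
never_stops = stopping_epoch([5.0, 4.0, 3.0, 2.0], patience=3)
print(stopped_at, never_stops)
```

A larger patience makes the rule more tolerant of noisy loss curves at the cost of training for longer.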

12.5.4. Extracting the embedding#

The main result we want to extract from the trained model is the latent representation for each cell. This is a multi-dimensional embedding where the batch effects have been removed that can be used in a similar way to how we use PCA dimensions when analysing a single dataset. We store this in obsm with the key X_scVI.

adata_scvi.obsm["X_scVI"] = model_scvi.get_latent_representation()

12.5.5. Calculate a batch-corrected UMAP#

We will now visualise the data as we did before integration. We calculate a new UMAP embedding but instead of finding nearest neighbors in PCA space, we start with the corrected representation from scVI.

sc.pp.neighbors(adata_scvi, use_rep="X_scVI")

sc.tl.umap(adata_scvi)

adata_scvi

AnnData object with n_obs × n_vars = 10270 × 2000

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker', '_scvi_batch', '_scvi_labels'

var: 'feature_types', 'gene_id', 'n_cells', 'highly_variable', 'means', 'dispersions', 'dispersions_norm', 'highly_variable_nbatches', 'highly_variable_intersection'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism', 'log1p', 'hvg', 'pca', 'neighbors', 'umap', 'batch_colors', 'cell_type_colors', '_scvi_uuid', '_scvi_manager_uuid'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap', 'X_pca', 'X_umap', 'X_scVI'

varm: 'PCs'

layers: 'counts', 'logcounts'

obsp: 'distances', 'connectivities'

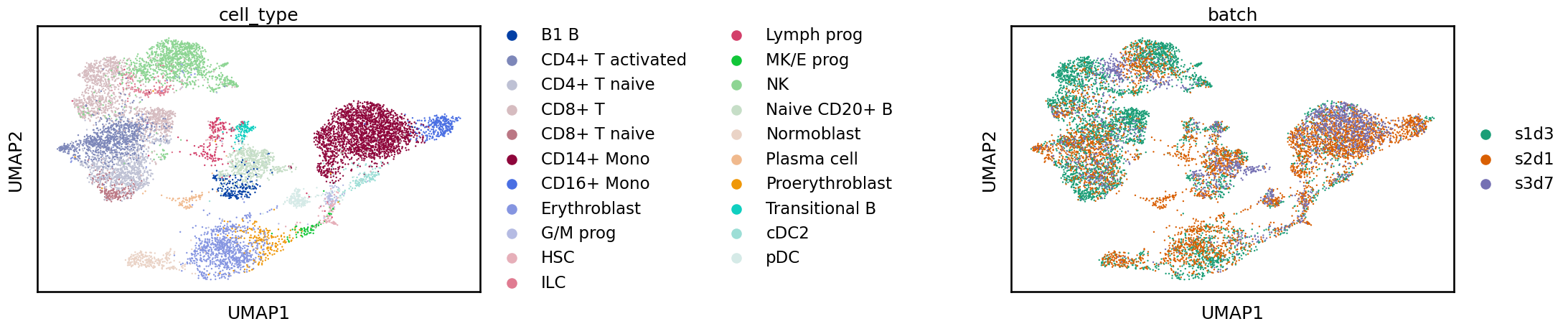

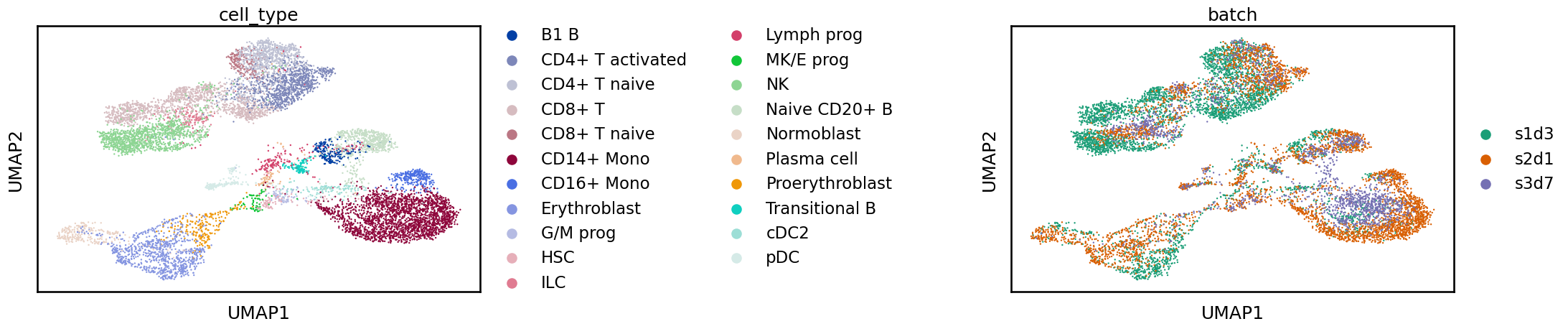

Once we have the new UMAP representation we can plot it coloured by batch and identity labels as before.

sc.pl.umap(adata_scvi, color=[label_key, batch_key], wspace=1)


This looks better! Before, the various batches were shifted apart from each other. Now, the batches overlap more and we have a single blob for each cell identity label.

In many cases, we would not already have identity labels so from this stage we would continue with clustering, annotation and further analysis as described in other chapters.

12.6. VAE integration using cell labels#

When performing integration with scVI we pretended that we didn’t already have any cell labels (although we showed them in plots). While this scenario is common there are some cases where we do know something about cell identity in advance. Most often this is when we want to combine one or more publicly available datasets with data from a new study. When we have labels for at least some of the cells we can use scANVI (single-cell ANnotation using Variational Inference) [Xu et al., 2021]. This is an extension of the scVI model that can incorporate cell identity label information as well as batch information. Because it has this extra information it can try to keep the differences between cell labels while removing batch effects. Benchmarking suggests that scANVI tends to better preserve biological signals compared to scVI but sometimes it is not as effective at removing batch effects [Luecken et al., 2021]. While we have labels for all cells here it is also possible to use scANVI in a semi-supervised manner where labels are only provided for some cells.

Label harmonization

If you are using scANVI to integrate multiple datasets for which you already have labels it is important to first perform label harmonization. This refers to a process of checking that labels are consistent across the datasets that are being integrated. For example, a cell may be annotated as a “T cell” in one dataset, but a cell of the same type could have been given the label “CD8+ T cell” in another dataset. How best to harmonize labels is an open question but often requires input from subject-matter experts.
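A very simple form of label harmonization is a lookup table from dataset-specific labels to a shared vocabulary. The mapping below is hypothetical and deliberately collapses labels to a coarse level. In practice the table would be agreed with subject-matter experts, and any label that cannot be mapped should be reviewed rather than silently kept.

```python
# Hypothetical mapping from dataset-specific labels to a shared vocabulary.
harmonised_label = {
    "T cell": "T cell",
    "CD8+ T cell": "T cell",
    "B cell": "B cell",
    "Naive CD20+ B": "B cell",
}


def harmonise(labels, mapping, unknown="unlabelled"):
    """Map each label to the shared vocabulary and flag unmapped labels as unknown."""
    return [mapping.get(label, unknown) for label in labels]


# Two hypothetical datasets annotated at different resolutions.
dataset_a = ["T cell", "B cell", "T cell"]
dataset_b = ["CD8+ T cell", "Naive CD20+ B", "Mystery cell"]

labels_a = harmonise(dataset_a, harmonised_label)
labels_b = harmonise(dataset_b, harmonised_label)

# Labels without an entry in the mapping need manual review.
unmapped = [old for old, new in zip(dataset_b, labels_b) if new == "unlabelled"]
print(labels_a, labels_b, unmapped)
```

Collapsing to the coarser label loses resolution, so an alternative is to keep the finer labels and mark cells that only have a coarse annotation as unlabelled.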

We start by creating a scANVI model object. Note that because scANVI refines an already trained scVI model, we provide the scVI model rather than an AnnData object. If we had not already trained an scVI model we would need to do that first. We also provide a key for the column of adata.obs which contains our cell labels as well as the label which corresponds to unlabelled cells. In this case all of our cells are labelled so we just provide a dummy value. In most cases, it is important to check that this is set correctly so that scANVI knows which label to ignore during training.

# Normally we would need to run scVI first but we have already done that here

# model_scvi = scvi.model.SCVI(adata_scvi) etc.

model_scanvi = scvi.model.SCANVI.from_scvi_model(

model_scvi, labels_key=label_key, unlabeled_category="unlabelled"

)

print(model_scanvi)

model_scanvi.view_anndata_setup()

ScanVI Model with the following params: unlabeled_category: unlabelled, n_hidden: 128, n_latent: 10, n_layers: 1, dropout_rate: 0.1, dispersion: gene, gene_likelihood: zinb Training status: Not Trained

Anndata setup with scvi-tools version 0.18.0.

Setup via `SCANVI.setup_anndata` with arguments:

{ │ 'labels_key': 'cell_type', │ 'unlabeled_category': 'unlabelled', │ 'layer': 'counts', │ 'batch_key': 'batch', │ 'size_factor_key': None, │ 'categorical_covariate_keys': None, │ 'continuous_covariate_keys': None }

Summary Statistics ┏━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━┓ ┃ Summary Stat Key ┃ Value ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━┩ │ n_batch │ 3 │ │ n_cells │ 10270 │ │ n_extra_categorical_covs │ 0 │ │ n_extra_continuous_covs │ 0 │ │ n_labels │ 22 │ │ n_vars │ 2000 │ └──────────────────────────┴───────┘

Data Registry ┏━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━┓ ┃ Registry Key ┃ scvi-tools Location ┃ ┡━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━┩ │ X │ adata.layers['counts'] │ │ batch │ adata.obs['_scvi_batch'] │ │ labels │ adata.obs['_scvi_labels'] │ └──────────────┴───────────────────────────┘

batch State Registry ┏━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━┓ ┃ Source Location ┃ Categories ┃ scvi-tools Encoding ┃ ┡━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━┩ │ adata.obs['batch'] │ s1d3 │ 0 │ │ │ s2d1 │ 1 │ │ │ s3d7 │ 2 │ └────────────────────┴────────────┴─────────────────────┘

labels State Registry ┏━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━┓ ┃ Source Location ┃ Categories ┃ scvi-tools Encoding ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━┩ │ adata.obs['cell_type'] │ B1 B │ 0 │ │ │ CD4+ T activated │ 1 │ │ │ CD4+ T naive │ 2 │ │ │ CD8+ T │ 3 │ │ │ CD8+ T naive │ 4 │ │ │ CD14+ Mono │ 5 │ │ │ CD16+ Mono │ 6 │ │ │ Erythroblast │ 7 │ │ │ G/M prog │ 8 │ │ │ HSC │ 9 │ │ │ ILC │ 10 │ │ │ Lymph prog │ 11 │ │ │ MK/E prog │ 12 │ │ │ NK │ 13 │ │ │ Naive CD20+ B │ 14 │ │ │ Normoblast │ 15 │ │ │ Plasma cell │ 16 │ │ │ Proerythroblast │ 17 │ │ │ Transitional B │ 18 │ │ │ cDC2 │ 19 │ │ │ pDC │ 20 │ │ │ unlabelled │ 21 │ └────────────────────────┴──────────────────┴─────────────────────┘

This scANVI model object is very similar to what we saw before for scVI. As mentioned previously, we could modify the structure of the model network but here we just use the default parameters.

Again, we have a heuristic for selecting the number of training epochs. Note that this is far fewer than before as we are just refining the scVI model, rather than training a whole network from scratch.

max_epochs_scanvi = int(np.min([10, np.max([2, round(max_epochs_scvi / 3.0)])]))

model_scanvi.train(max_epochs=max_epochs_scanvi)

INFO Training for 10 epochs.

GPU available: False, used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

Epoch 10/10: 100%|██████████| 10/10 [00:35<00:00, 3.69s/it, loss=747, v_num=1]

`Trainer.fit` stopped: `max_epochs=10` reached.

Epoch 10/10: 100%|██████████| 10/10 [00:35<00:00, 3.56s/it, loss=747, v_num=1]
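The scANVI heuristic used above clamps a third of the scVI epochs to a range between 2 and 10. The sketch below wraps the same expression in a function (the function name is our own) and evaluates it for our 400 scVI epochs and for two smaller hypothetical values.

```python
def scanvi_epoch_heuristic(max_epochs_scvi):
    """A third of the scVI epochs, limited to at least 2 and at most 10."""
    return int(min(10, max(2, round(max_epochs_scvi / 3.0))))


# 400 is the value used in this chapter; 20 and 3 are hypothetical.
scanvi_epochs = {n: scanvi_epoch_heuristic(n) for n in (400, 20, 3)}
print(scanvi_epochs)
```

For any scVI model trained for 29 epochs or more, the upper limit of 10 epochs applies.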

We can extract the new latent representation from the model and create a new UMAP embedding as we did for scVI.

adata_scanvi = adata_scvi.copy()

adata_scanvi.obsm["X_scANVI"] = model_scanvi.get_latent_representation()

sc.pp.neighbors(adata_scanvi, use_rep="X_scANVI")

sc.tl.umap(adata_scanvi)

sc.pl.umap(adata_scanvi, color=[label_key, batch_key], wspace=1)


Looking at the UMAP representation, it is difficult to tell the difference between scANVI and scVI, but as we will see below, the metric scores differ when the quality of the integrations is quantified. This is a reminder that we shouldn’t overinterpret these two-dimensional representations, especially when comparing methods.

12.7. Graph-based integration#

The next method we will look at is BBKNN or “Batch Balanced KNN” [Polański et al., 2019]. This is a very different approach to scVI: rather than using a neural network to embed cells in a batch-corrected space, it modifies how the k-nearest neighbor (KNN) graph used for clustering and embedding is constructed. As we have seen in previous chapters, the normal KNN procedure connects each cell to the most similar cells across the whole dataset. BBKNN instead enforces that cells are connected to cells from other batches. While this is a simple modification, it can be quite effective, particularly when there are very strong batch effects. However, because the output is an integrated graph, it can have limited downstream uses, as few packages will accept a graph as input.
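To make the idea of a batch-balanced graph concrete, here is a minimal sketch in plain Python that searches for each cell's nearest neighbors in every batch separately. It is an illustration under simplifying assumptions (exact Euclidean distances, toy two-dimensional points, the cell itself excluded from its own neighbors) and not the BBKNN package's implementation. The function name `batch_balanced_neighbors` and the toy data are hypothetical.

```python
import math


def batch_balanced_neighbors(points, batches, neighbors_within_batch=3):
    """For each cell, return its nearest neighbors found separately in every batch."""
    neighbors = []
    for i, p in enumerate(points):
        found = []
        for batch in sorted(set(batches)):
            # Candidate cells from this batch only (excluding the cell itself)
            candidates = [
                j for j, b in enumerate(batches) if b == batch and j != i
            ]
            candidates.sort(key=lambda j: math.dist(p, points[j]))
            found.extend(candidates[:neighbors_within_batch])
        neighbors.append(found)
    return neighbors


# Toy data: two batches offset along the first axis (a strong "batch effect")
points = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.0), (5.0, 0.0), (5.1, 0.0), (5.2, 0.0)]
batches = ["a", "a", "a", "b", "b", "b"]

graph = batch_balanced_neighbors(points, batches, neighbors_within_batch=1)
print(graph[0])
```

With an ordinary KNN search, the first cell would only be connected to cells from its own batch because the other batch is far away. Searching per batch guarantees at least one connection into each batch, which is what allows the resulting graph to bridge strong batch effects.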

An important parameter for BBKNN is the number of neighbors per batch. A suggested heuristic is to use 25 if there are more than 100,000 cells, and the default of 3 otherwise.

neighbors_within_batch = 25 if adata_hvg.n_obs > 100000 else 3

neighbors_within_batch

3

Before using BBKNN, we first perform a PCA as we would before building a normal KNN graph. Unlike scVI, which models raw counts, here we start with the log-normalised expression matrix.

adata_bbknn = adata_hvg.copy()

adata_bbknn.X = adata_bbknn.layers["logcounts"].copy()

sc.pp.pca(adata_bbknn)

We can now run BBKNN, which replaces the call to the scanpy neighbors() function in a standard workflow. An important difference is that the batch_key argument must be set; it specifies the column in adata_bbknn.obs that contains the batch labels.

bbknn.bbknn(

adata_bbknn, batch_key=batch_key, neighbors_within_batch=neighbors_within_batch

)

adata_bbknn

AnnData object with n_obs × n_vars = 10270 × 2000

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id', 'n_cells', 'highly_variable', 'means', 'dispersions', 'dispersions_norm', 'highly_variable_nbatches', 'highly_variable_intersection'

uns: 'ATAC_gene_activity_var_names', 'dataset_id', 'genome', 'organism', 'log1p', 'hvg', 'pca', 'neighbors', 'umap', 'batch_colors', 'cell_type_colors'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap', 'X_pca', 'X_umap'

varm: 'PCs'

layers: 'counts', 'logcounts'

obsp: 'distances', 'connectivities'

Unlike the default scanpy function, BBKNN does not allow specifying a key for storing results so they are always stored under the default “neighbors” key.

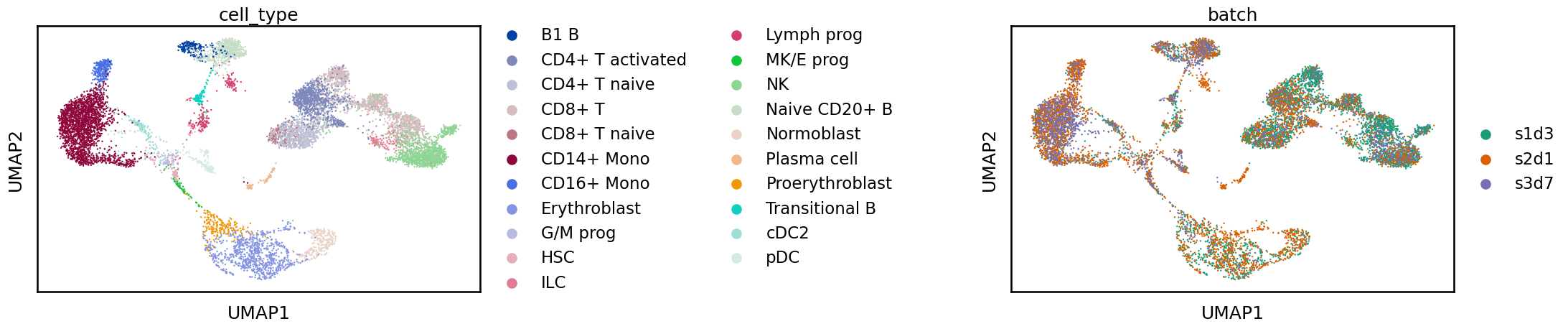

We can use this new integrated graph just like we would a normal KNN graph to construct a UMAP embedding.

sc.tl.umap(adata_bbknn)

sc.pl.umap(adata_bbknn, color=[label_key, batch_key], wspace=1)


This integration is also improved compared to the unintegrated data, with cell identities grouped together, but we still see some shifts between batches.

12.8. Linear embedding integration using Mutual Nearest Neighbors (MNN)#

Some downstream applications cannot accept an integrated embedding or neighborhood graph and require a corrected expression matrix. One approach that can produce this output is the integration method in Seurat [Butler et al., 2018, Satija et al., 2015, Stuart et al., 2019]. The Seurat integration method belongs to a class of linear embedding models that make use of the idea of mutual nearest neighbors (which Seurat calls anchors) to correct batch effects [Haghverdi et al., 2018]. Mutual nearest neighbors are pairs of cells from two different datasets which are in the neighborhood of each other when the datasets are placed in the same (latent) space. After finding these cells they can be used to align the two datasets and correct the differences between them. Seurat has also been found to be one of the top mixing methods in some evaluations [Tran et al., 2020].
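The definition of mutual nearest neighbors can be illustrated with a short sketch. The code below assumes two toy datasets that have already been placed in a shared two-dimensional space and uses exact Euclidean distances. It only finds the mutual pairs and does not reproduce Seurat's anchor-finding or correction steps. The function names `nearest` and `mutual_nearest_neighbors` and the toy coordinates are hypothetical.

```python
import math


def nearest(query, reference, k):
    """Indices of the k reference points closest to the query point."""
    order = sorted(
        range(len(reference)), key=lambda j: math.dist(query, reference[j])
    )
    return set(order[:k])


def mutual_nearest_neighbors(data_a, data_b, k=1):
    """Pairs (i, j) where b_j is a neighbor of a_i and a_i is a neighbor of b_j."""
    a_to_b = [nearest(a, data_b, k) for a in data_a]
    b_to_a = [nearest(b, data_a, k) for b in data_b]
    return [
        (i, j)
        for i, targets in enumerate(a_to_b)
        for j in sorted(targets)
        if i in b_to_a[j]
    ]


# Toy datasets already placed in a shared two-dimensional space
data_a = [(0.0, 0.0), (1.0, 0.0), (4.0, 4.0)]
data_b = [(0.2, 0.1), (1.1, 0.2), (9.0, 9.0)]

pairs = mutual_nearest_neighbors(data_a, data_b, k=1)
print(pairs)
```

The third cell in each toy dataset has a nearest neighbor in the other dataset, but that relationship is not reciprocated, so it is not paired. Requiring reciprocity is what makes the retained pairs plausible matches between equivalent cells in the two datasets.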

As Seurat is an R package, we must transfer our data from Python to R. Here we prepare the AnnData object for conversion so that it can be handled by rpy2 and anndata2ri.

adata_seurat = adata_hvg.copy()

# Convert categorical columns to strings

adata_seurat.obs[batch_key] = adata_seurat.obs[batch_key].astype(str)

adata_seurat.obs[label_key] = adata_seurat.obs[label_key].astype(str)

# Delete uns as this can contain arbitrary objects which are difficult to convert

del adata_seurat.uns

adata_seurat

AnnData object with n_obs × n_vars = 10270 × 2000

obs: 'GEX_pct_counts_mt', 'GEX_n_counts', 'GEX_n_genes', 'GEX_size_factors', 'GEX_phase', 'ATAC_nCount_peaks', 'ATAC_atac_fragments', 'ATAC_reads_in_peaks_frac', 'ATAC_blacklist_fraction', 'ATAC_nucleosome_signal', 'cell_type', 'batch', 'ATAC_pseudotime_order', 'GEX_pseudotime_order', 'Samplename', 'Site', 'DonorNumber', 'Modality', 'VendorLot', 'DonorID', 'DonorAge', 'DonorBMI', 'DonorBloodType', 'DonorRace', 'Ethnicity', 'DonorGender', 'QCMeds', 'DonorSmoker'

var: 'feature_types', 'gene_id', 'n_cells', 'highly_variable', 'means', 'dispersions', 'dispersions_norm', 'highly_variable_nbatches', 'highly_variable_intersection'

obsm: 'ATAC_gene_activity', 'ATAC_lsi_full', 'ATAC_lsi_red', 'ATAC_umap', 'GEX_X_pca', 'GEX_X_umap', 'X_pca', 'X_umap'

varm: 'PCs'

layers: 'counts', 'logcounts'

obsp: 'distances', 'connectivities'

The prepared AnnData is now available in R as a SingleCellExperiment object thanks to anndata2ri. Note that this is transposed compared to an AnnData object so our observations (cells) are now the columns and our variables (genes) are now the rows.

%%R -i adata_seurat

adata_seurat


class: SingleCellExperiment

dim: 2000 10270

metadata(0):

assays(3): X counts logcounts

rownames(2000): GPR153 TNFRSF25 ... TMLHE-AS1 MT-ND3

rowData names(9): feature_types gene_id ... highly_variable_nbatches

highly_variable_intersection

colnames(10270): TCACCTGGTTAGGTTG-3-s1d3 CGTTAACAGGTGTCCA-3-s1d3 ...

AGCAGGTAGGCTATGT-12-s3d7 GCCATGATCCCTTGCG-12-s3d7

colData names(28): GEX_pct_counts_mt GEX_n_counts ... QCMeds

DonorSmoker

reducedDimNames(8): ATAC_gene_activity ATAC_lsi_full ... PCA UMAP

mainExpName: NULL

altExpNames(0):
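The change in orientation between the two objects (10270 × 2000 in AnnData versus 2000 × 10270 in the SingleCellExperiment) is simply a transpose of the expression matrix. The toy example below shows this with a made-up matrix of two cells and three genes. The values and variable names are illustrative only.

```python
# Toy cells x genes matrix in the AnnData orientation (rows are cells)
cells_by_genes = [
    [1, 0, 3],  # cell_1
    [0, 2, 5],  # cell_2
]

# SingleCellExperiment orientation: rows are genes, columns are cells
genes_by_cells = [list(column) for column in zip(*cells_by_genes)]

print(len(cells_by_genes), len(cells_by_genes[0]))  # cells, genes
print(len(genes_by_cells), len(genes_by_cells[0]))  # genes, cells
```

Keeping this in mind helps when indexing in R, where the first index selects genes and the second selects cells.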

Seurat uses its own object to store data. Helpfully the authors provide a function to convert from SingleCellExperiment. We just provide the SingleCellExperiment object and tell Seurat which assays (layers in our AnnData object) contain raw counts and normalised expression (which Seurat stores in a slot called “data”).

%%R -i adata_seurat

seurat <- as.Seurat(adata_seurat, counts = "counts", data = "logcounts")

seurat


An object of class Seurat

2000 features across 10270 samples within 1 assay

Active assay: originalexp (2000 features, 0 variable features)

8 dimensional reductions calculated: ATAC_gene_activity, ATAC_lsi_full, ATAC_lsi_red, ATAC_umap, GEX_X_pca, GEX_X_umap, PCA, UMAP

Unlike some of the other methods we have seen, which take a single object and a batch key, the Seurat integration functions require a list of objects. We create this using the SplitObject() function.

%%R -i batch_key

batch_list <- SplitObject(seurat, split.by = batch_key)

batch_list

$s1d3

An object of class Seurat

2000 features across 4279 samples within 1 assay

Active assay: originalexp (2000 features, 0 variable features)

8 dimensional reductions calculated: ATAC_gene_activity, ATAC_lsi_full, ATAC_lsi_red, ATAC_umap, GEX_X_pca, GEX_X_umap, PCA, UMAP

$s2d1

An object of class Seurat

2000 features across 4220 samples within 1 assay

Active assay: originalexp (2000 features, 0 variable features)

8 dimensional reductions calculated: ATAC_gene_activity, ATAC_lsi_full, ATAC_lsi_red, ATAC_umap, GEX_X_pca, GEX_X_umap, PCA, UMAP

$s3d7

An object of class Seurat

2000 features across 1771 samples within 1 assay

Active assay: originalexp (2000 features, 0 variable features)